Pulling data from Hadoop and Publishing to Socrata


- Posted: 28 Oct 2016

- Category: blog

- Tagged: examples, publishers, open source, and hadoop


This example shows how to pull data from a Hadoop Distributed File System (HDFS) instance and load it into Socrata:

- Read data from an HDFS instance. This example runs Hadoop locally, but you could just as easily pull data from a remote HDFS cluster.

- Save it to a local temporary directory.

- Publish it to Socrata.
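The first two steps can be scripted. The sketch below is a minimal, hypothetical helper: `fetch_from_hdfs` and the `HDFS_BIN` override are illustrative names, not part of Hadoop or Socrata tooling. It assumes the `hdfs` CLI is on your `PATH` and leaves publishing to DataSync. It uses `mktemp -d` instead of writing straight into `/tmp`, so that concurrent runs don't overwrite each other's files.

```shell
#!/usr/bin/env bash
# Sketch of the "read from HDFS, save to a temp directory" steps.
# Assumption: the `hdfs` CLI is on PATH; set HDFS_BIN to override it.
# Publishing is left to DataSync and is not shown here.

HDFS_BIN="${HDFS_BIN:-hdfs}"

# Copy one file out of HDFS into a new private temp directory and print the
# local path. The caller publishes the file and then deletes the directory.
fetch_from_hdfs() {
  local src="$1" dest_dir
  dest_dir="$(mktemp -d)" || return 1
  # If the copy fails, remove the empty temp directory before reporting failure.
  "$HDFS_BIN" dfs -get "$src" "$dest_dir/" || { rm -rf "$dest_dir"; return 1; }
  printf '%s\n' "$dest_dir/$(basename "$src")"
}
```

Usage might look like `local_csv="$(fetch_from_hdfs data.csv)"`. You would then publish `$local_csv` with DataSync and finish with `rm -rf "$(dirname "$local_csv")"`.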

Hadoop prerequisites

Hadoop and HDFS: The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than relying on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, delivering a highly available service on top of a cluster of computers, each of which may be prone to failure. HDFS, the Hadoop Distributed File System, is Hadoop's storage layer and is where the data in this example lives.

You can install Hadoop either locally or on a cloud service like Amazon EC2:

- For this example, we chose to install Hadoop on macOS Sierra using this guide

- If you don’t have a macOS or *nix machine, you can also install Hadoop and HDFS on EC2

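This example uses a local single-node instance with one NameNode and one DataNode. HDFS itself is designed to run distributed across many machines, and the same hdfs dfs commands apply to a multi-node cluster.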

Before continuing, follow the guide for your platform to download, install, and configure whichever instance you will be running.

Getting data from HDFS

Locate your file

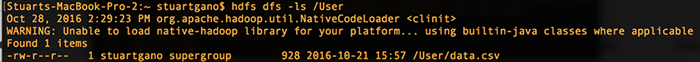

The first step is to list the contents of your directory in HDFS to locate your file:

```shell
hdfs dfs -ls /User
```
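If the directory holds many files, you can filter the listing in a script. The sketch below assumes the usual eight-column `hdfs dfs -ls` output (permissions, replication, owner, group, size, date, time, path) and does not handle paths that contain spaces. `list_hdfs_csvs` and `HDFS_BIN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Assumption: the `hdfs` CLI is on PATH; set HDFS_BIN to override it.
HDFS_BIN="${HDFS_BIN:-hdfs}"

# Print the path of every CSV file under an HDFS directory, one per line.
# -R lists recursively. The awk filter skips header lines (fewer than 8 fields)
# and directories (permissions start with "d"), then keeps paths ending in .csv.
list_hdfs_csvs() {
  local dir="$1"
  "$HDFS_BIN" dfs -ls -R "$dir" |
    awk 'NF >= 8 && $1 !~ /^d/ && $8 ~ /\.csv$/ { print $8 }'
}
```

For example, `list_hdfs_csvs /User` prints only the CSV paths, which you can then pass to `hdfs dfs -get`.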

Download your data file

Copy your data file from HDFS to a local directory (here, /tmp). A relative HDFS path such as data.csv is resolved against your HDFS home directory, typically /user/<username>:

```shell
hdfs dfs -get data.csv /tmp
```

Publish to Socrata using DataSync

Once you have your file locally, you can publish it via Socrata DataSync, just like any other data file.
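Before publishing, you may want to confirm that the local copy is complete. The minimal sketch below compares byte counts. It assumes your Hadoop version's `hdfs dfs -stat` supports the `%b` format (file size in bytes); check the FileSystem shell docs for your release. `same_size_as_hdfs` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Assumption: the `hdfs` CLI is on PATH; set HDFS_BIN to override it.
HDFS_BIN="${HDFS_BIN:-hdfs}"

# Succeed only if the local copy has the same size in bytes as the HDFS original.
same_size_as_hdfs() {
  local hdfs_path="$1" local_path="$2"
  local remote_bytes local_bytes
  remote_bytes="$("$HDFS_BIN" dfs -stat '%b' "$hdfs_path")" || return 1
  # wc pads its output with spaces on some platforms (e.g. macOS), so strip them.
  local_bytes="$(wc -c < "$local_path" | tr -d ' ')"
  [ "$remote_bytes" = "$local_bytes" ]
}
```

For example, `same_size_as_hdfs data.csv /tmp/data.csv && echo "copy looks complete"`. A matching size does not rule out corrupted content, but it does catch truncated copies.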

Clean up after yourself

After you’re done, don’t forget to clean up the data file you downloaded:

```shell
rm /tmp/data.csv
```
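In a script, a bash `trap` can make this cleanup automatic, so the downloaded file is removed even if the publish step fails partway through. The following is a minimal sketch. `with_scratch_dir` is a hypothetical helper name, and the command you pass it receives the scratch directory as its last argument.

```shell
#!/usr/bin/env bash
# Run a command with a fresh scratch directory appended as its last argument.
# The function body is a subshell "( ... )", so the EXIT trap fires when the
# subshell ends and deletes the directory whether the command succeeded or not.
# The command's exit status is passed through unchanged.
with_scratch_dir() (
  scratch="$(mktemp -d)" || exit 1
  trap 'rm -rf "$scratch"' EXIT
  "$@" "$scratch"
)
```

For example, `with_scratch_dir my_publish_step` would call a (hypothetical) `my_publish_step` function that downloads into the directory and publishes from it. No separate `rm` is needed afterwards.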